In the first half of 2024, over 2,000 actively exploited vulnerabilities were circulating online, only about 0.91% of the total number of published CVEs in 2023.

To put this into perspective, our research across customer environments found that a single cluster contains, on average, 1,260 instances of exploited vulnerabilities, 17 of them classified as critical and 863 as high. For organizations managing multiple clusters, tracking and remediating all of these quickly becomes overwhelming, if not impossible.

The ultimate goal is to direct the right issues to developers at the right time, with clear context and evidence to back those decisions. The obstacle isn’t just the sheer volume of vulnerabilities; it’s the lack of the context needed to prioritize them well.

To accurately understand which vulnerabilities should be sent to development (and when), Application Security analysts need to answer key questions:

- Is the vulnerable code actually executed in my environment, or is this just a theoretical risk?

- What are the actual preconditions required for exploitation?

- What is the function of the affected workload, and how critical is it to business operations?

Many vulnerability scanners can’t provide these answers. They generate overwhelming lists of known vulnerabilities with no insight into their real-world impact, which leaves analysts investigating them manually, delays remediation, and burdens developers with unnecessary fixes.

At Sweet Security, we believe that vulnerability management needs to shift. That’s why we’re introducing a new approach: LLM-powered, runtime-driven vulnerability management—built to cut through the noise and provide actionable information that ensures security teams can focus on the threats that matter most.

Not All Vulnerabilities Are Created Equal

Consider two public-facing vulnerabilities:

- A privilege escalation vulnerability affecting a container with an inbound connection.

- A remote code execution (RCE) vulnerability with active inbound traffic and a direct path to sensitive data.

Both might have a high CVSS score, but in a given environment the RCE, reachable through live inbound traffic and one step away from sensitive data, is far more dangerous. Sweet Security’s approach ensures that security teams understand the difference and respond accordingly.

Score & Prioritize Risks Based on How They Impact Your Environment

Most scoring systems rely on a point-based accumulation of various factors, such as CVSS score, exploitation in the wild, runtime utilization, public-facing exposure, and workload exploitation. These factors are often assessed in isolation, however, and fail to capture the full context of the environment.
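To make the point-based model concrete, here is a minimal sketch of additive scoring. The `Finding` fields, signal names, and point values are hypothetical illustrations, not any particular vendor’s formula; the point is only that each signal adds a fixed bonus regardless of whether it matters for the specific vulnerability.

```python
from dataclasses import dataclass


@dataclass
class Finding:
    name: str
    cvss: float                   # CVSS base score, 0.0 to 10.0
    exploited_in_wild: bool       # known to be exploited in the wild
    loaded_at_runtime: bool       # vulnerable package observed in use
    public_facing: bool
    has_inbound_connection: bool


# Hypothetical point values: each signal is judged on its own and added up.
POINTS = {
    "exploited_in_wild": 3.0,
    "loaded_at_runtime": 2.0,
    "public_facing": 2.0,
    "has_inbound_connection": 1.5,
}


def additive_score(f: Finding) -> float:
    """Start from CVSS and add a fixed bonus for every signal that is present."""
    score = f.cvss
    for signal, points in POINTS.items():
        if getattr(f, signal):
            score += points
    return score


# Two otherwise identical privilege escalation findings (illustrative values).
privesc_inbound = Finding("privesc (inbound)", 7.8, True, True, True, True)
privesc_no_inbound = Finding("privesc (no inbound)", 7.8, True, True, True, False)

for f in (privesc_inbound, privesc_no_inbound):
    print(f"{f.name}: {additive_score(f):.1f}")
```

Here the inbound connection alone adds 1.5 points to the first finding, even though nothing in the model asks whether inbound traffic is relevant to exploiting that vulnerability.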

Sweet Security takes a different approach, using LLMs to consider all of these data dimensions together. Instead of evaluating each runtime insight separately, Sweet’s new scoring mechanism weighs them holistically, in the context of the specific environment and the actual purpose of each microservice. This allows for a more precise and dynamic risk assessment.

Returning to the privilege escalation example above, most tools would assign a higher score to a privilege escalation vulnerability if it has an inbound connection. However, privilege escalation vulnerabilities do not inherently require an inbound connection to be exploited.

Traditional scoring methods overlook this nuance, which leads to inaccurate prioritization. The Sweet Score, on the other hand, accounts for how each vulnerability actually functions within the environment, so security teams focus on what poses a real threat instead of relying on static formulas.
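The nuance can be illustrated with a rule-based sketch that only counts a signal when it is a precondition for exploitation, using the CVSS v3.x Attack Vector metric (N, A, L, P). This is a hypothetical illustration of the reasoning, not the Sweet Score (which this post describes as LLM-driven); the `Finding` fields, the gating rule, and the point values are all assumptions.

```python
from dataclasses import dataclass

# CVSS v3.x Attack Vector (AV) metric values.
NETWORK, ADJACENT, LOCAL, PHYSICAL = "N", "A", "L", "P"
REMOTE_VECTORS = {NETWORK, ADJACENT}


@dataclass(frozen=True)
class Finding:
    name: str
    cvss: float                  # CVSS base score, 0.0 to 10.0
    attack_vector: str           # one of N, A, L, P
    has_inbound_connection: bool
    reaches_sensitive_data: bool


def precondition_aware_score(f: Finding) -> float:
    """Hypothetical score: a signal adds points only when it is relevant
    to exploiting this particular vulnerability or to its impact."""
    score = f.cvss
    # Inbound traffic is an entry point only for remotely exploitable bugs.
    # A local privilege escalation (AV:L) typically needs the attacker to
    # already run code in the container, so inbound traffic alone adds nothing.
    if f.attack_vector in REMOTE_VECTORS and f.has_inbound_connection:
        score += 3.0
    # Impact: a direct path to sensitive data raises the stakes.
    if f.reaches_sensitive_data:
        score += 2.0
    return score


# Illustrative findings mirroring the two examples earlier in this post.
privesc = Finding("privilege escalation", 8.8, LOCAL, True, False)
rce = Finding("remote code execution", 8.8, NETWORK, True, True)

for f in (privesc, rce):
    print(f"{f.name}: {precondition_aware_score(f):.1f}")
```

With identical CVSS scores, the privilege escalation finding scores the same with or without an inbound connection, while the RCE finding is ranked higher because both of its context signals are relevant to it. A real system would need far richer context than a single metric, which is the gap the post argues an LLM-based approach is meant to fill.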

A Playbook for Every Persona with LLM-Powered Remediation

Mitigating vulnerabilities isn’t just about fixing code—it requires collaboration across multiple teams. Our new Remediation Tab provides actionable steps tailored for different personas within the organization:

- Derisking – Quick wins to reduce immediate exposure.

- Mitigation – Workarounds to minimize exploitation potential.

- Remediation – Long-term fixes to fully resolve the issue.

This structured approach ensures security, DevOps, and IT teams have the guidance they need to act efficiently.

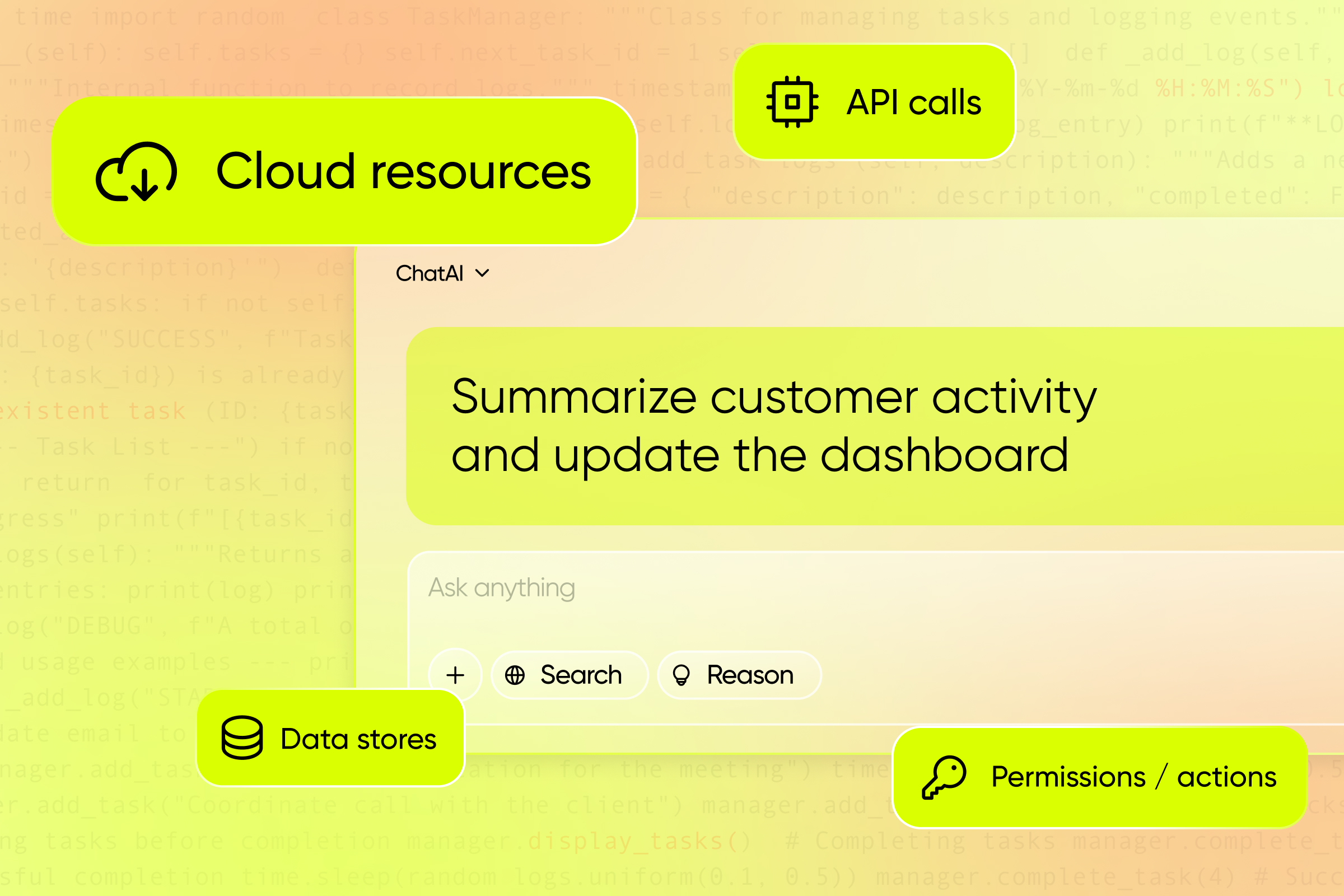

How Sweet’s LLM Supercharges Vulnerability Management

By integrating large language models (LLMs) into vulnerability management, Sweet Security provides:

- External Knowledge Integration – Enriches vulnerability analysis with external data sources for a comprehensive view of risk.

- Reasoning & Risk Assessment – LLMs analyze and explain risk factors, helping teams make informed decisions.

- Automated Prioritization – Reduces manual effort by streamlining risk evaluation, so security teams focus on the vulnerabilities with the highest real-world risk.

From Noise to Clarity: The Future of Vulnerability Management

Vulnerability management is evolving, and the days of relying on static scores and endless scan reports are over. Sweet Security’s runtime-powered, LLM-driven approach provides the context, intelligence, and prioritization needed to cut through the noise and focus on real threats.

It’s time to move beyond theoretical risks and start managing vulnerabilities based on how they behave in your actual environment.

Ready to see how Sweet Security is transforming vulnerability management? Learn more by booking a demo.
