Build an AI-BOM

Get a complete bill of materials for your AI ecosystem. Track every model (public or fine-tuned) and dependency, with full visibility into its origin, version, and risk.

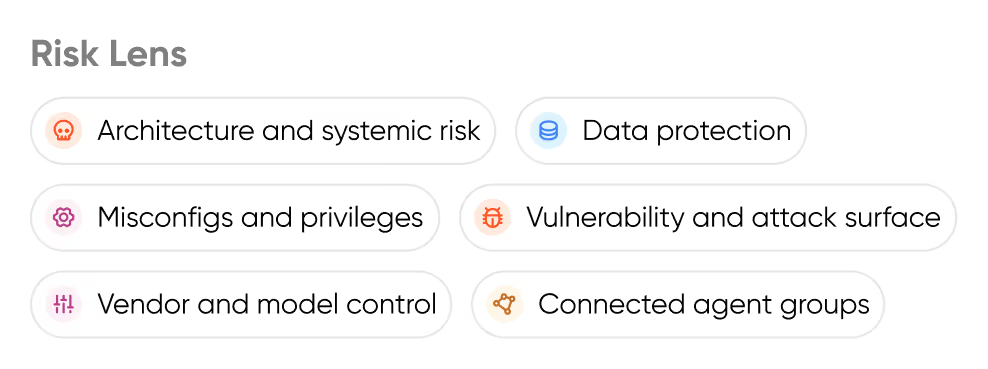

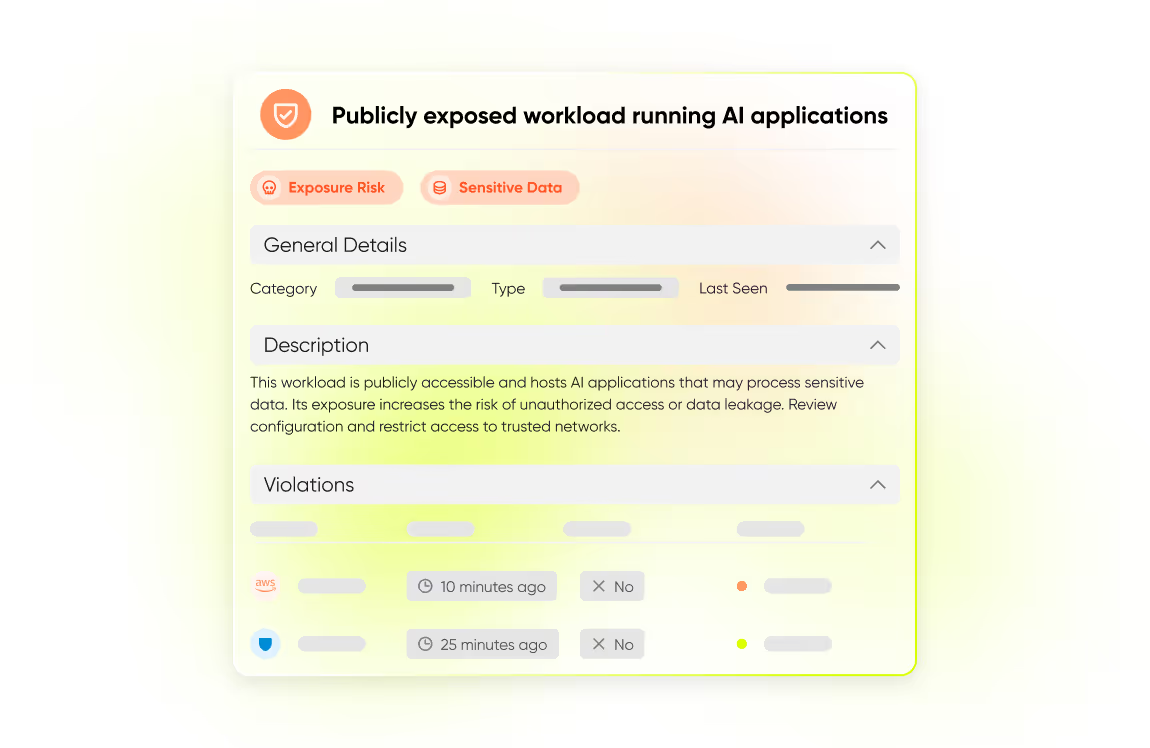

Strengthen Your AI Posture (AI-SPM)

Continuously monitor AI components for misconfigurations, exposed endpoints, vulnerabilities, and policy violations.

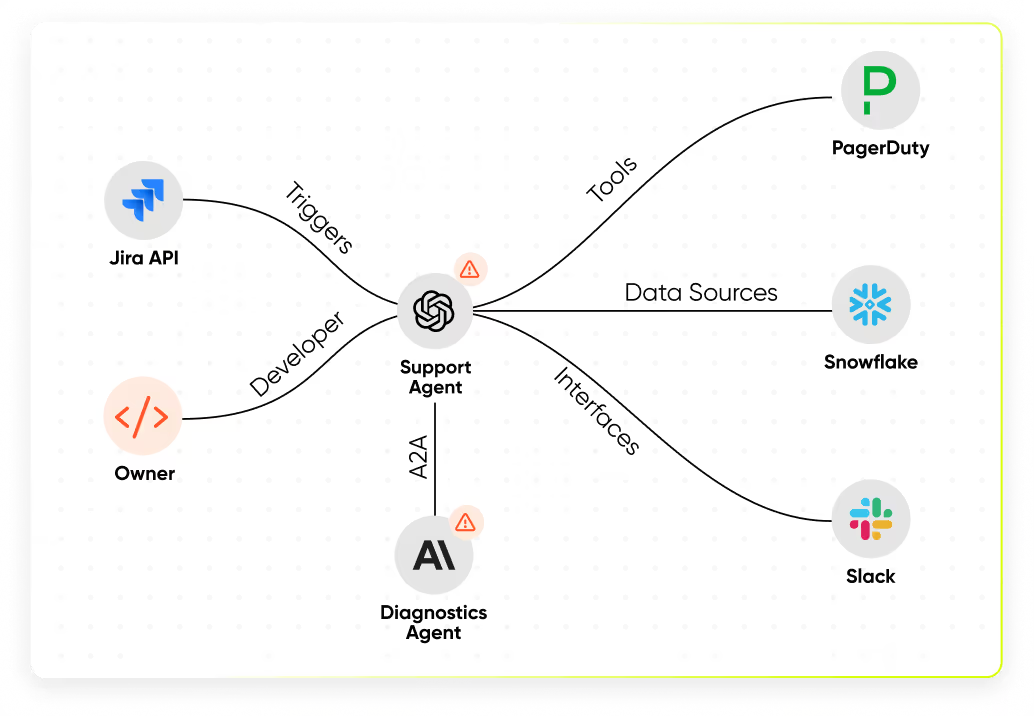

Discover Every Agent

Instantly detect all AI agents running in your environment, including shadow or unmanaged ones.

Understand What Each Agent Does

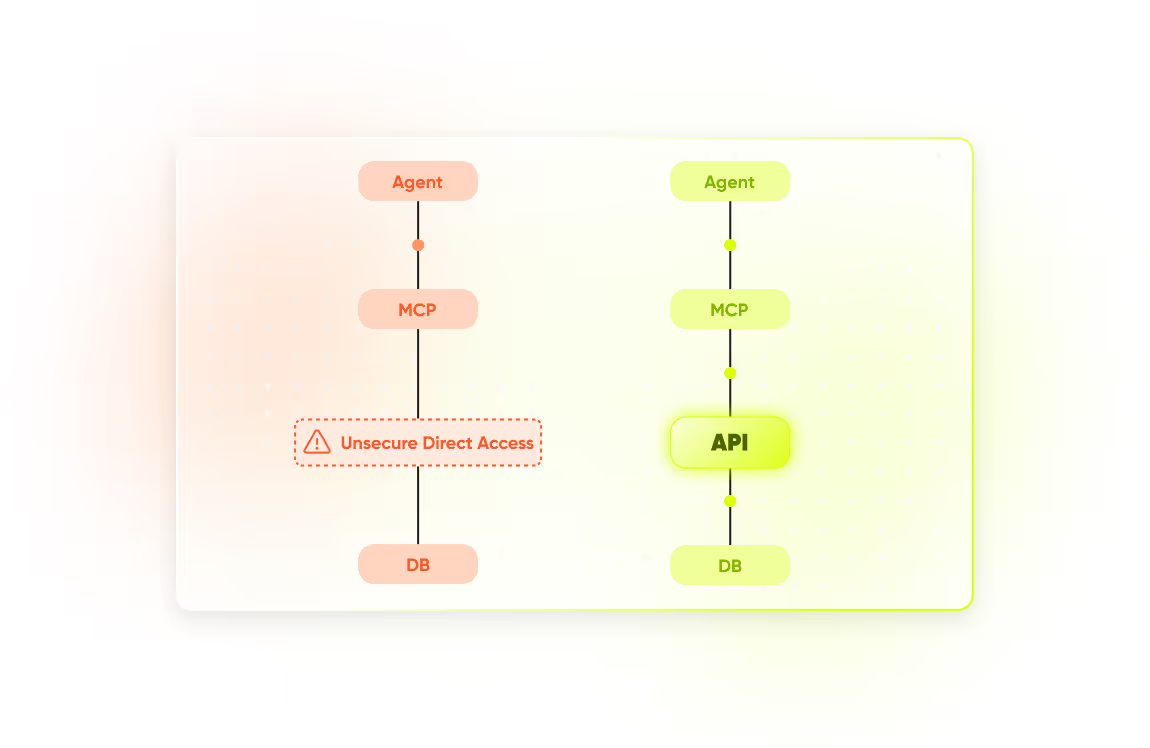

Trace every action and uncover intent across timelines and workflows. Assess the risk of your architecture, whether agents access data sources directly or through secured APIs.

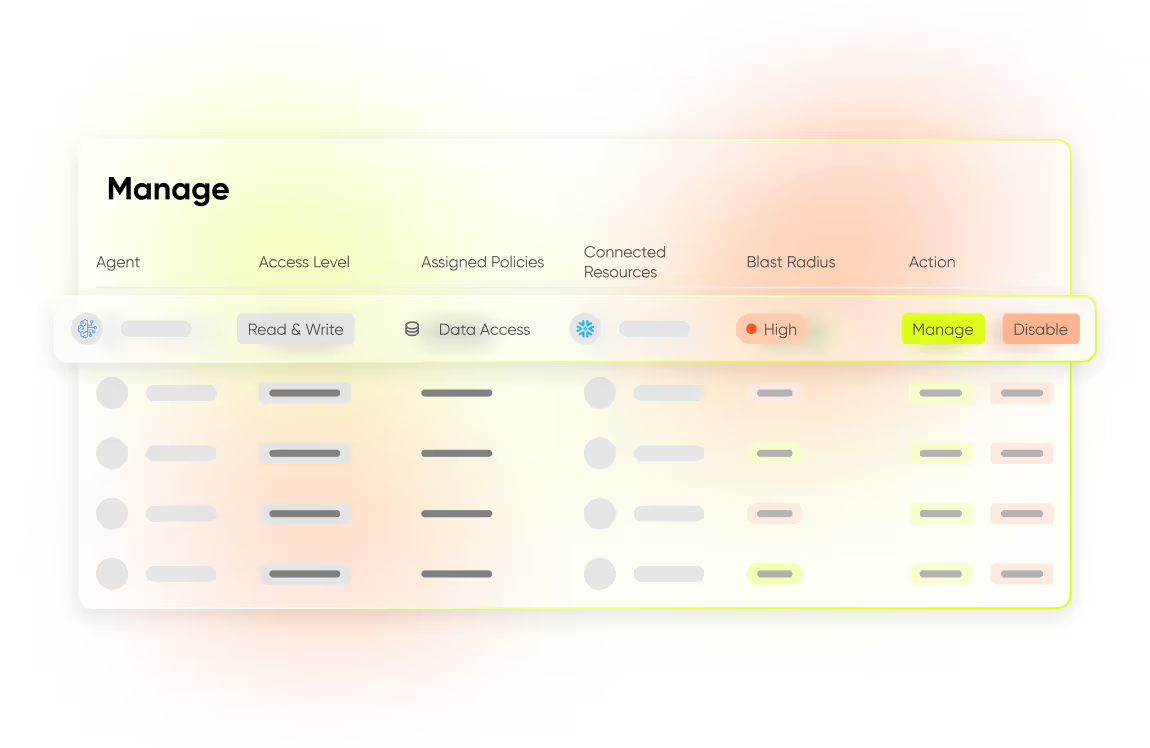

Manage Permissions, Access Control and Auditing

Ensure agents operate with minimal permissions. Reveal the blast radius of an attack on each agent and enforce access policies.

Red Teaming

Test the behavior of your agents under adversarial conditions to expose vulnerabilities before attackers do.

AIDR (AI Detection & Response)

Detect and block threats to your AI agents, from prompt injections to hallucinations. Sweet routes AI agent traffic through its AI Gateway to analyze prompts and block malicious operations, making it easy to set up guardrails and policies. Sweet also extends its renowned behavioral baselining to AI agents to detect deviations and unexpected workflows.