Why the sweet spot in security is where cloud workloads and AI agents are protected together, based on what’s executing right now.

What if securing your cloud infrastructure and protecting your AI applications were… the same problem?

That question keeps coming up in my conversations with security leaders. At first, it sounds a little crazy, maybe even wrong.

Cloud security is one thing. AI security is another thing. Different tools. Different teams. Different risks. Right?

But let’s look closer.

The Assumption We Built Our Tools Around

For years, we've shaped our security thinking around a clean separation. Cloud security over here, focused on infrastructure, containers, and workloads. AI security over there, focused on models, prompts, and agent behavior.

We built tool categories around this split: CNAPPs (cloud-native application protection platforms) for cloud, specialized platforms for AI agents and LLMs. Different vendors, different dashboards, different teams.

It made sense at the time. The problems felt distinct enough to need different approaches.

But here's what we missed: AI doesn't live next to the cloud. AI runs on the cloud.

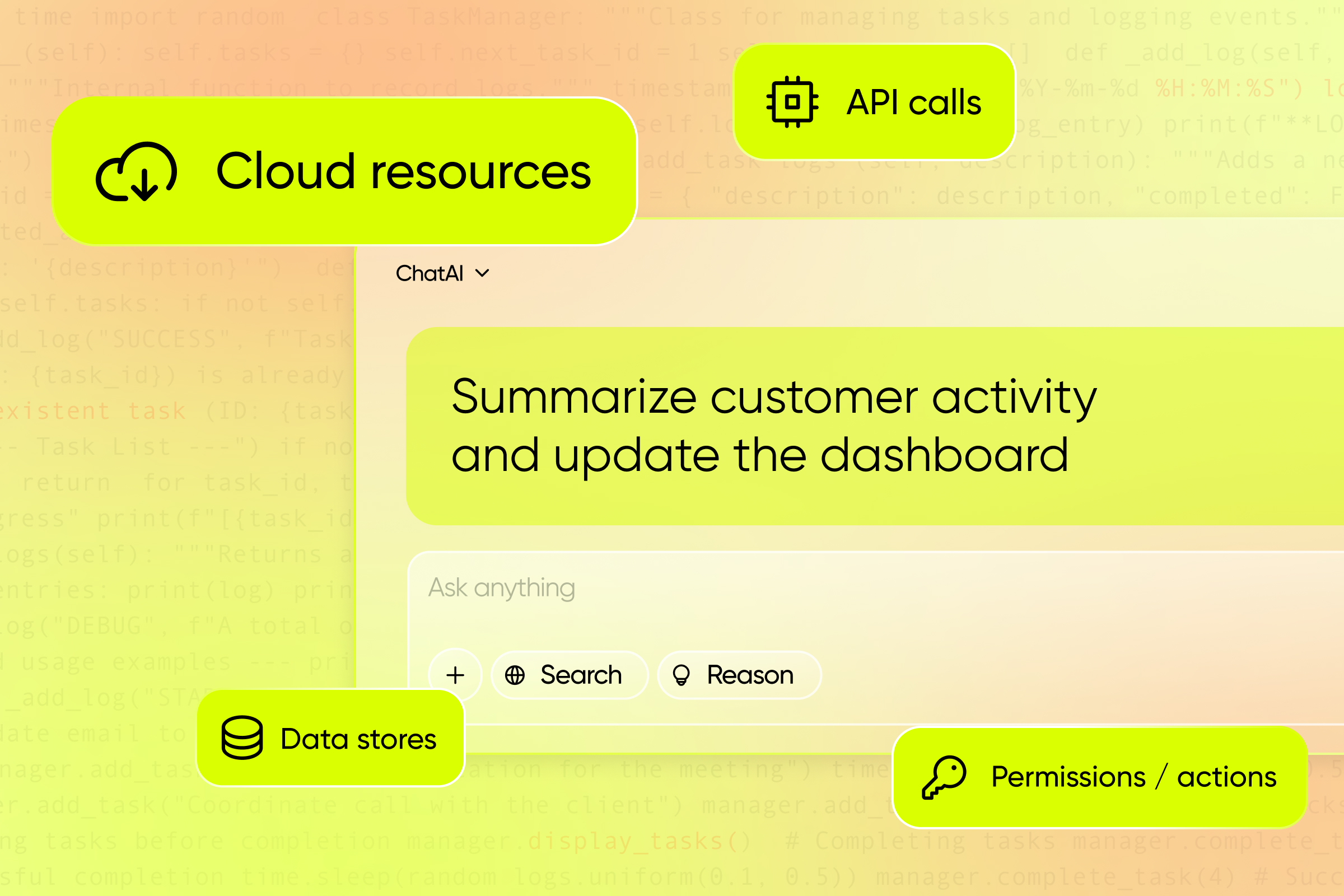

It uses the same identities. The same APIs. The same data stores. The same workloads and compute resources. The same network paths an attacker would follow.

The only real difference is the behavior.

Traditional applications behave in ways we can predict. AI applications make decisions on their own at runtime, often using tools, identities, and data that are hard to fully track or control.

And that's where the tension starts.

Where Our Current Models Break

Most cloud security tools were built to answer static questions:

- What's misconfigured?

- What policy is violated?

- What could be vulnerable?

They scan at build time, check against benchmarks, and generate findings based on theoretical risk.

Meanwhile, most AI security tools focus only on prompts, responses, or model behavior, without seeing the full cloud environment those systems depend on. They look at what an AI agent says or generates, but miss how it moves through your infrastructure: what APIs it calls, what data it accesses, and which identities it assumes.

So we end up with blind spots on both sides.

AI security without cloud context misses real attack paths. Cloud security without runtime AI visibility misses new behaviors entirely.

Here's the uncomfortable truth: attackers don't care how we organize our tools.

They don't check whether they're exploiting "cloud infrastructure" or "AI agents." They just follow execution paths. They move through identities, abuse APIs, and exploit whatever is actually running. Whether that's human-written code or agentic AI makes no difference to them.

The Convergence: Security Becomes a Runtime Problem

I believe these two types of security are converging: cloud and AI security are becoming the same runtime problem.

Once you start asking the right question, "What is my cloud doing right now, and should it be allowed to?", everything looks different.

You stop splitting your view between traditional infrastructure and AI applications. You start seeing them through the same lens: runtime behavior.

- What's executing right now?

- What identities is it using?

- What data is it accessing?

- What APIs is it calling?

- Should this be allowed?

When you ask these questions, a container running traditional code and an AI agent making autonomous decisions start to look remarkably similar. They're both workloads. They both use identities. They both access data. They both operate in real time.

The difference isn't what they are. The difference is how they behave, and whether that behavior is expected or risky.
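To make the "same lens" idea concrete, here is a minimal Python sketch. It assumes a hypothetical event schema and a simple allowlist-style behavior baseline; neither is Sweet Security's actual data model or API, and the workload, role, and data store names are made up for illustration. A container and an AI agent are recorded as the same `RuntimeEvent` type, and one `should_allow` check answers "should this be allowed?" for both, looking only at the identity, API, and data involved.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class RuntimeEvent:
    """One observed action by a running workload (hypothetical schema)."""
    workload: str    # name of the container, function, or AI agent
    kind: str        # "container" or "ai_agent"; recorded, but the check never reads it
    identity: str    # identity the action executed under
    api_call: str    # API the workload called
    data_store: str  # data store the call touched


@dataclass
class BehaviorBaseline:
    """Expected (identity, api_call, data_store) triples per workload (hypothetical)."""
    expected: dict[str, set[tuple[str, str, str]]] = field(default_factory=dict)

    def should_allow(self, event: RuntimeEvent) -> bool:
        # Same question for every workload: is this action part of its known behavior?
        allowed = self.expected.get(event.workload, set())
        return (event.identity, event.api_call, event.data_store) in allowed


# Hypothetical baselines for one traditional service and one AI agent.
baseline = BehaviorBaseline(expected={
    "billing-service": {("billing-role", "s3:GetObject", "invoices-bucket")},
    "support-agent": {("support-agent-role", "tickets.read", "tickets-db")},
})

events = [
    RuntimeEvent("billing-service", "container", "billing-role", "s3:GetObject", "invoices-bucket"),
    RuntimeEvent("support-agent", "ai_agent", "support-agent-role", "tickets.read", "tickets-db"),
    # The agent decides on its own to read invoice data: outside its baseline.
    RuntimeEvent("support-agent", "ai_agent", "support-agent-role", "s3:GetObject", "invoices-bucket"),
]

for event in events:
    verdict = "allow" if baseline.should_allow(event) else "flag"
    print(f"{event.kind:<9} {event.workload:<16} {event.api_call:<13} -> {verdict}")
```

The point of the sketch is that `kind` is stored but never consulted: the verdict depends only on behavior. A real platform would learn and score baselines rather than rely on a static allowlist.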

Finding the Sweet Spot

Now things get really interesting!

What if there were a way to secure traditional cloud applications and AI agents from the same starting point: runtime behavior?

What if you didn't need separate tools, separate teams, separate consoles to protect your infrastructure and your AI applications?

What if you could see shadow AI spinning up in the same view where you track containers and serverless functions? What if you could apply runtime guardrails to agentic applications the same way you detect anomalies in traditional workloads?

That's what we call the Sweet Spot.

The Sweet Spot is where traditional cloud security and AI security converge, so cloud workloads and AI applications are secured together, with the same runtime intelligence, from one platform.

Not a traditional CNAPP trying to bolt on AI security as an afterthought.

Not an AI security point solution that ignores your cloud infrastructure.

The Sweet Spot is where both are seen clearly, protected consistently, and understood through the lens of what's actually executing right now.

What Changes When You Find It

When you start from runtime behavior, everything shifts:

You can focus on what's exploitable, not theoretical. Instead of drowning in 10,000 CVE findings where 99% don't matter in your specific environment, you see what's reachable, what's exposed, what's actually running, and what an attacker could genuinely exploit.

You can discover the unknown. Shadow AI spinning up without oversight? Containers you didn't know existed? Autonomous agents making calls you never authorized? Runtime visibility catches them all, because they're executing in your environment.

You can apply guardrails where behavior moves off course. AI agents don't wait for approval; they act in milliseconds. But if you're watching runtime behavior, you can stop risky actions as they happen, not after the damage is done.

You can consolidate without losing flexibility. One platform for workloads, identities, data, and AI agents, but modular enough to deploy what matters most to you right now, whether that's vulnerability prioritization, AI guardrails, detection and response, or all of it together.
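The first of these shifts, focusing on what's exploitable rather than what's theoretical, can be sketched in a few lines of Python. This assumes each static finding can be enriched with two runtime signals: whether the vulnerable package is actually loaded by a running process, and whether the workload is reachable from outside. The `Finding` fields and the `prioritize` helper are hypothetical, and the CVE IDs and workload names are placeholders, not real scanner output.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Finding:
    """A vulnerability finding enriched with runtime context (hypothetical fields)."""
    cve_id: str
    workload: str
    package_loaded: bool    # vulnerable package is loaded by a running process
    internet_exposed: bool  # workload is reachable from outside the environment


def prioritize(findings: list[Finding]) -> list[Finding]:
    """Keep only findings that are both running and reachable by an attacker."""
    return [f for f in findings if f.package_loaded and f.internet_exposed]


findings = [
    Finding("CVE-EXAMPLE-0001", "checkout-api", package_loaded=True, internet_exposed=True),
    Finding("CVE-EXAMPLE-0002", "checkout-api", package_loaded=False, internet_exposed=True),
    Finding("CVE-EXAMPLE-0003", "batch-job", package_loaded=True, internet_exposed=False),
    # An AI agent's workload is prioritized by exactly the same rule.
    Finding("CVE-EXAMPLE-0004", "rag-agent", package_loaded=True, internet_exposed=True),
]

for f in prioritize(findings):
    print(f.cve_id, f.workload)
```

A production system would weigh many more signals (exploit availability, identity permissions, data sensitivity), but the shape is the same: runtime context filters the noise down to what an attacker could actually reach.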

The New Question for the AI Era

For years, we've been asking: "Is this cloud secure?"

That question led us to build scans, policies, and compliance checks. Static questions for static systems.

But in the AI era, the question changes: "What is my cloud doing right now, and should it be allowed to?"

That question leads somewhere different. It leads to runtime intelligence. It leads to real-time control. It leads to seeing traditional applications and AI agents the same way: as behaviors that need to be understood, monitored, and protected.

It leads to the Sweet Spot.

I'm excited about this shift. Not because the technology is flashy (though it is), but because it finally gives security teams a chance to stop playing catch-up, to stop managing cloud security and AI security as separate, siloed problems, and to stop drowning in noise and start focusing on real risk.

To find the place where it all comes together.

That's the Sweet Spot. And we're building it at Sweet Security.

Want to experience the Sweet Spot and see what runtime intelligence looks like when it secures both traditional cloud and AI applications? Visit us at RSAC for a special tasting, just across the street from Moscone.