Most AI security programs started in the natural place: the most visible risk. End users were pasting sensitive data into ChatGPT, so teams built controls around that threat through governance boards, browser blocks, and employee education. Then came the SaaS controls: managing Microsoft Copilot licenses, restricting which teams could access what data, and implementing DLP policies on AI-enabled applications.

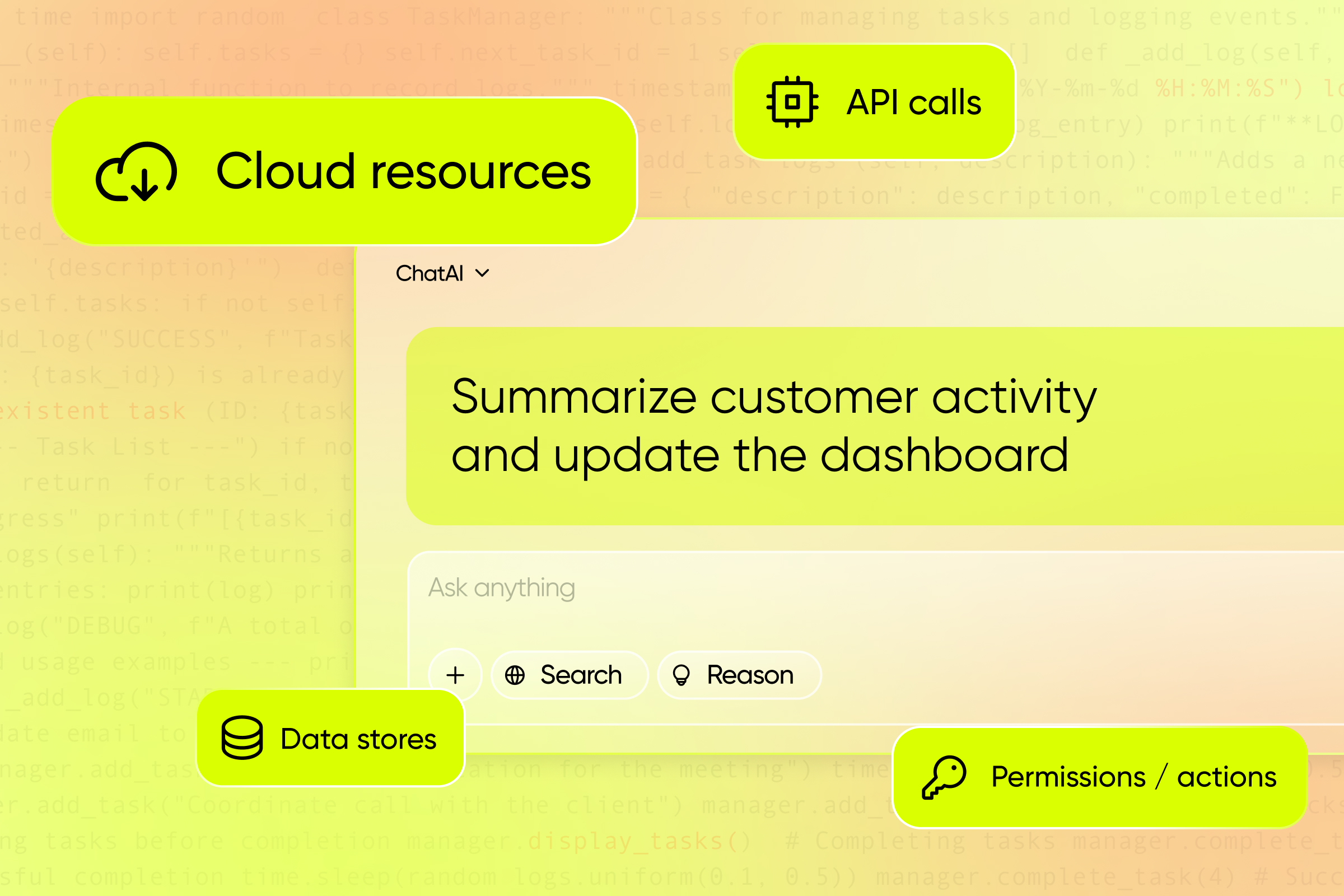

Those controls are good, but they're incomplete. While security teams were focused on governing who uses AI, developers were building something fundamentally different: agentic workflows where applications, not people, are talking to LLMs. This is the third phase of AI security, and it requires a paradigm shift from user governance to workload control.

The Inventory Crisis: You Can't Secure What You Can't See

Cloud environments are changing faster than security teams can observe them. Developers are spinning up custom vector databases, connecting to OpenAI or Anthropic, and embedding orchestration frameworks, often without a security review, threat model, or inventory record. Traditional discovery mechanisms fail because these aren't traditional applications.

To regain visibility, you need to instrument three layers simultaneously:

- Network level: Monitor which workloads are resolving or connecting to GenAI API endpoints. Look for connections to api.openai.com, api.anthropic.com, and generativelanguage.googleapis.com. This shows you which systems are calling external models.

- Application level: Inspect HTTP Host headers and request metadata that indicate service intent. A workload making calls to /v1/chat/completions is doing something categorically different from one calling your internal microservices.

- Runtime level: Detect the presence of specific client libraries (langchain, openai, anthropic SDK) and orchestration frameworks in the running code. This means observing what's actually loaded in memory at execution time, not just guessing from static manifests.
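The three signals above can be sketched in a few lines, assuming Python workloads. This is an illustration rather than a production detector: the function names (`matches_genai_endpoint`, `is_llm_request`, `loaded_ai_libraries`) are hypothetical, the host, path, and library lists contain only the examples named above, and how you collect DNS logs or request metadata is left out.

```python
import sys

# Hostnames named in the text; a real deployment would maintain a longer list.
GENAI_API_HOSTS = {
    "api.openai.com",
    "api.anthropic.com",
    "generativelanguage.googleapis.com",
}

# Request path named in the text as an indicator of LLM traffic.
LLM_API_PATHS = ("/v1/chat/completions",)

# Top-level module names for the client libraries named in the text.
AI_CLIENT_MODULES = {"langchain", "openai", "anthropic"}


def matches_genai_endpoint(hostname: str) -> bool:
    """Network level: is this resolved or connected hostname a known GenAI API host?"""
    return hostname.strip().lower().rstrip(".") in GENAI_API_HOSTS


def is_llm_request(hostname: str, path: str) -> bool:
    """Application level: does the Host header plus path look like an LLM call?"""
    return matches_genai_endpoint(hostname) and path.startswith(LLM_API_PATHS)


def loaded_ai_libraries(modules=None) -> set:
    """Runtime level: which AI client libraries are imported in this process?

    Reads sys.modules (what is actually loaded) unless a list of module
    names is passed in, for example one collected from another process.
    """
    names = list(sys.modules) if modules is None else modules
    top_level = {name.split(".")[0] for name in names}
    return top_level & AI_CLIENT_MODULES
```

Checking `sys.modules` rather than a requirements file reflects the point above: a library listed in a manifest may never be imported, while one loaded at runtime is in use.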

These three signals, correlated in real time, give you an AI Bill of Materials (AI-BOM), a living inventory of every workload making LLM calls, what it's connecting to, and how it's architected. Without this, you're building security controls in the dark.
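To make the correlation step concrete, here is a minimal sketch of merging per-layer observations into one inventory record per workload. The `AIBomEntry` structure, the `(workload, layer, value)` tuple format, and the workload names are assumptions made for illustration; there is no standard AI-BOM schema implied here.

```python
from dataclasses import dataclass, field


@dataclass
class AIBomEntry:
    workload: str
    endpoints: set = field(default_factory=set)  # network-level signal
    api_paths: set = field(default_factory=set)  # application-level signal
    libraries: set = field(default_factory=set)  # runtime-level signal

    @property
    def layers_seen(self) -> int:
        """Number of layers (0 to 3) with at least one observation."""
        return sum(bool(s) for s in (self.endpoints, self.api_paths, self.libraries))


def build_ai_bom(signals):
    """Merge (workload, layer, value) observations into one entry per workload."""
    bom = {}
    for workload, layer, value in signals:
        entry = bom.setdefault(workload, AIBomEntry(workload))
        if layer == "network":
            entry.endpoints.add(value)
        elif layer == "application":
            entry.api_paths.add(value)
        elif layer == "runtime":
            entry.libraries.add(value)
        else:
            raise ValueError(f"unknown layer: {layer}")
    return bom


# Hypothetical observations from the three collectors.
signals = [
    ("billing-svc", "network", "api.openai.com"),
    ("billing-svc", "application", "/v1/chat/completions"),
    ("billing-svc", "runtime", "langchain"),
    ("report-job", "network", "api.anthropic.com"),
]
bom = build_ai_bom(signals)
```

An entry seen at only one layer, like `report-job` here, is a prompt to investigate: the workload is reaching a model endpoint, but you do not yet know how it is built.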

Architecting the Golden Position: Control at the Workload-LLM Boundary

Once you have visibility, the next question is where to apply control. Traditional AppSec tools like WAFs fail catastrophically here. WAFs were built for applications with static logic: if the input matches a known pattern, block it. But in agentic systems the logic lives in the prompt and is determined at runtime. A request to an agent is natural language being interpreted as executable instructions.

The only defensible architecture is to establish a control point directly between the workload and the LLM, at the exact moment the prompt is constructed and before it executes.
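One way to picture that control point is a wrapper that every model call passes through once the prompt is fully constructed. The sketch below is an assumption-laden illustration: `guarded_call`, `PromptBlocked`, and `block_bulk_delete` are hypothetical names, `fake_llm` stands in for a real model client, and the keyword rule is a placeholder for real intent recognition.

```python
from typing import Callable, List, Optional

# A check returns a human-readable reason to block, or None to allow.
Check = Callable[[str], Optional[str]]


class PromptBlocked(Exception):
    """Raised when a check rejects a prompt before it reaches the model."""


def guarded_call(prompt: str, call_llm: Callable[[str], str], checks: List[Check]) -> str:
    """Run every check on the constructed prompt, then call the model."""
    for check in checks:
        reason = check(prompt)
        if reason is not None:
            raise PromptBlocked(reason)
    return call_llm(prompt)


def block_bulk_delete(prompt: str) -> Optional[str]:
    # Placeholder keyword rule; real intent recognition needs more than this.
    if "delete all" in prompt.lower():
        return "bulk delete instruction"
    return None


def fake_llm(prompt: str) -> str:
    # Stand-in for a real model client so the sketch runs offline.
    return f"model saw: {prompt}"
```

With these definitions, `guarded_call("Summarize sales data", fake_llm, [block_bulk_delete])` returns the model output, while the same call with "Delete all customer records" raises `PromptBlocked` before the model is ever invoked.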

This control point needs four core capabilities:

- Intent recognition: You need to see the prompt before it executes. Not the HTTP request but the actual instruction the agent is about to follow. "Delete all customer records" looks identical to "Summarize sales data" at the network layer, but the semantic difference is critical.

- Behavioral baselining: Static rules can't protect you because every agent has a unique profile. What matters is deviation. Why is this customer service agent trying to write to the billing database? Why is the reporting agent making admin-level API calls? Baseline what normal looks like, then flag anomalies.

- Context correlation: An injection attempt against an agent with no internet access and read-only database permissions is noise. The same injection against an agent with admin credentials and S3 write access is a critical alert. Rich context (permissions, exposure, blast radius) turns raw detections into actionable intelligence.

- System prompt integrity: The system prompt is the control plane. It defines what the agent can and cannot do. Any attempt to override it (e.g. "ignore previous instructions" or "you are now in developer mode") must be blocked immediately. Treat system prompt violations like you'd treat kernel exploits.
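Two of these capabilities, system prompt integrity and context correlation, can be sketched together. The example below assumes a simple pattern match for the two override phrases quoted above and a hypothetical `AgentContext` record; the severity labels and thresholds are illustrative choices, and a real detector would need far more than two regular expressions.

```python
import re
from dataclasses import dataclass

# Patterns for the two override phrases quoted in the text.
OVERRIDE_PATTERNS = [
    re.compile(r"ignore\s+(all\s+)?previous\s+instructions", re.IGNORECASE),
    re.compile(r"you\s+are\s+now\s+in\s+developer\s+mode", re.IGNORECASE),
]


@dataclass(frozen=True)
class AgentContext:
    """Hypothetical description of what an agent is able to reach and do."""
    internet_access: bool
    can_write_data: bool
    has_admin_credentials: bool


def is_override_attempt(text: str) -> bool:
    """True if the text contains a known system prompt override phrase."""
    return any(p.search(text) for p in OVERRIDE_PATTERNS)


def severity(text: str, ctx: AgentContext) -> str:
    """Rate a detection by the agent's blast radius, not by the text alone."""
    if not is_override_attempt(text):
        return "none"
    if ctx.has_admin_credentials or ctx.can_write_data:
        return "critical"
    if ctx.internet_access:
        return "medium"
    return "low"


sandboxed = AgentContext(internet_access=False, can_write_data=False, has_admin_credentials=False)
privileged = AgentContext(internet_access=True, can_write_data=True, has_admin_credentials=True)
```

The same input, "Please ignore previous instructions", rates as `low` for the sandboxed agent and `critical` for the privileged one, which is the distinction between noise and an actionable alert.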

Organizational Strategy: Use the AI Hype to Your Advantage

Here's the one advantage you have: executives are terrified of being left behind on AI. Use that FOMO.

For years, AppSec and cloud security teams have been asking for deep instrumentation: runtime monitoring, RASP-level visibility, and workload-level observability. The answer was often that it was too expensive, too intrusive, and not a priority. AI changes that calculation. When the CFO wants AI-powered financial forecasting and the CTO wants autonomous code review, you have leverage. The budget exists. The urgency exists. The business case for deep instrumentation has become a strategic imperative.

So don't wait for a Chief AI Security Officer. This is cloud security and AppSec applied to probabilistic systems. Own it now, before the inventory spirals and every team is running their own shadow AI infrastructure.

From Discovery to Runtime Control

Traditional software has fixed logic. You can analyze it statically, test it exhaustively, and define clear security boundaries. Agent logic is different. It's determined at runtime, in natural language, and based on inputs you can't predict. You can't static-scan your way to safety because the "code" is just a conversation.

The playbook is straightforward. First, solve the inventory problem by using network, application, and runtime signals to build a real-time AI-BOM. Second, establish control at the workload-LLM boundary, the only place where you can see intent, baseline behavior, correlate context, and enforce system prompt integrity.

The window for getting ahead of this is short. In twelve months, every organization will have agentic workflows in production. The ones with runtime visibility and control today will manage it as part of their existing security program. The ones without will be in crisis mode trying to retrofit governance onto a sprawling, invisible AI infrastructure. Don't wait.

